In my previous CMT post, I confided a genuine ‘worry’ about the perishability of computer and electroacoustic compositions. And people laugh at this.

Notwithstanding the half-jesting comment above that delights in the fact that computer/electroacoustic works may become unperformable due to technology change, and notwithstanding that some musical works are written deliberately to be topical/of-a-time/perishable and do not aspire to 200-year or 500-year relevance. Further notwithstanding that some composers’ goal is such prolific runtime indirection and aleatorics that there is no possibility of ‘rehearsals’ in the conventional sense, only performance and ad hoc ‘thrownness’. Notwithstanding the fact that ‘you-shoulda-been-there!’ installation art and ‘one-time happenings’ may be a legitimate aim for some.

Notwithstanding these things, it is sad that works’ performance life ends needlessly, prematurely. Sad when all that is left of them is a recording or two, and no one will ever again hear them performed ‘live’, interpreted by different performers and realized in different settings than the ones on the recordings. Sad unless the expressive intent is to make an object-lesson of senseless carnage, waste, loss.

Sorry, but a major facet of the hideous perishability of present-day computer music has to do with the fact that most musicians and composers do not have (nor do they have much desire to acquire) adequate expertise in engineering and fluency in refactoring code. On the one hand they will spend decades mastering multiple instruments and acquiring skill in orchestration, but on the other hand they will not spend a moment acquiring or maintaining the engineering skills needed to preserve their achievements as computer musicians and composers. Boggles my mind.

So what kinds of software tools and environments, if any, might be adequate for composer/users who lack technical knowledge in programming and software engineering, to enable their works to be performable ‘live’ 20 or more years from now, on different hardware and software than they were composed on? Or, given that computer and electroacoustic compositions involve multiple, complex, heterogeneous embedded (pervasive, ubiquitous) and parallel computing systems, is it realistic to think that a ready-made end-user integrated development environment (IDE) is even feasible to create, one that would effectively immunize such musicians and their compositions against the ravages of time and technology obsolescence?

My answer, as one who has lived for 25+ years as a professional software developer in a U.S. health informatics firm whose current generation of applications comprises more than 37 million lines of source code, is “I do not think so.” I do not think that any tool can comprehensively automate the porting and refactoring, now or ever.

Instead, I believe that composers who want their works to remain performed and performable will either (a) have to acquire some skills of a code-refactoring engineer and suck it up and spend a modest part of their creative hours doing actual refactoring work, or (b) outsource those refactoring tasks to service contractors who do have those skills.

In the (b) case, the composer needs to be knowledgeable enough about refactoring to be able to perform quality-assurance checks, to see that the contractor has done the job right, and to be sure that the new version does accurately reproduce the aesthetic choices of the original. Regression testing!
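To make the regression-testing idea concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: `render_original` and `render_ported` are hypothetical stand-ins for "the patch on the old platform" and "the patch after porting", and the tolerance is arbitrary. The point is only that the check compares rendered output, not source text.

```python
import math


def render_original(freq_hz, sample_rate, n_samples):
    """Hypothetical stand-in for the original patch: a plain sine oscillator."""
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate)
            for i in range(n_samples)]


def render_ported(freq_hz, sample_rate, n_samples):
    """Hypothetical stand-in for the ported patch: same tone, phase-accumulator style."""
    step = 2 * math.pi * freq_hz / sample_rate
    phase = 0.0
    out = []
    for _ in range(n_samples):
        out.append(math.sin(phase))
        phase += step
    return out


def max_abs_difference(a, b):
    """Largest sample-by-sample deviation between two renders of equal length."""
    if len(a) != len(b):
        raise ValueError("renders differ in length")
    return max(abs(x - y) for x, y in zip(a, b))


def regression_ok(reference, candidate, tolerance=1e-6):
    """True if the candidate render stays within tolerance of the reference."""
    return max_abs_difference(reference, candidate) <= tolerance
```

A composer checking a contractor’s work would keep the reference render from the old platform and run `regression_ok(reference, new_render)` after every port. A real check would probably want a perceptually motivated measure rather than a raw sample difference.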

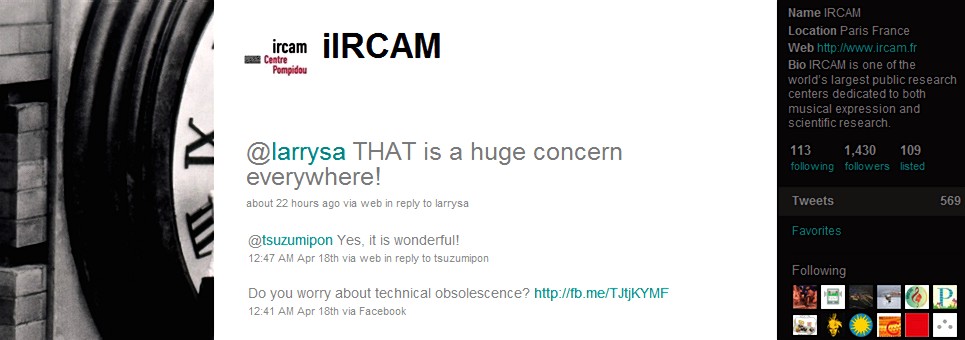

The “Oh, yes, it [obsolescence, no refactoring, perishing] is wonderful!” quip on the IRCAM Twitter feed (image above) [backhandedly?] feeds a kind of ego-centrism and sensation-mongering. I would love to think it’s harmless snark, a carefree “Here today, gone tomorrow, fine by me!” answer. Probably, that’s all that it is.

But the reality is, there’s a lot of remarkable, beautiful music, the result of countless hours of effort and musicianly skill, that today is being allowed to go headlong toward oblivion, all because nobody cares enough to engineer it in such a way as to prevent that. The book links below provide some useful sources that can guide you: they can help you learn how to refactor your own compositions for future performance platforms, or to hire somebody else to do this.

Yes, some composers, by their own choosing, will simply decline to preserve their work, or preserve it only temporarily. Sort of like Damien Hirst and his infamous shark in formaldehyde or rotting copulating cow and bull. “Posterity? Not my problem! I won’t be alive then!” That’s fine. Being a Damien Hirst is not a disgrace. Transience is even an enviable fate. I like the idea of cremation, for example. I understand the wish to become atoms, dust.

But if you don’t mean to be a Damien Hirst, then implementing your version-to-version ‘uplift’ changes without manually rewriting each and every data structure and interface design depends on another goal: clean separation and ‘pluggable’ pattern-based interfacing. If one has a synthesis algorithm that plays a particular soundfile or patch in a certain way and with certain timing and through certain channels, then a refactoring should allow that soundfile or patch to be accurately and consistently rendered in the new configuration, through the same or equivalent channels and with the same or indistinguishably different timing and processing, without having to manually reverse-engineer and manually specify each and every detail on the new config.
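Here is a rough sketch of what ‘pluggable’ interfacing could look like, assuming two made-up platforms. The names `PlayEvent`, `Backend`, `LegacyBackend` and `NewBackend` are all hypothetical, as are the command strings they emit. The score is written once against a platform-neutral event, and each platform gets its own small adapter.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass


@dataclass(frozen=True)
class PlayEvent:
    """Platform-neutral description of one playback event."""
    sample_name: str
    start_seconds: float
    channel: int  # 0-based in the neutral form


class Backend(ABC):
    """What the composition needs from any platform."""

    @abstractmethod
    def schedule(self, event: PlayEvent) -> str:
        ...


class LegacyBackend(Backend):
    """Hypothetical old platform: 1-based channels, time in milliseconds."""

    def schedule(self, event):
        millis = round(event.start_seconds * 1000)
        return f"play {event.sample_name} ch{event.channel + 1} @{millis}ms"


class NewBackend(Backend):
    """Hypothetical new platform: 0-based channels, time in samples at 48 kHz."""

    SAMPLE_RATE = 48000

    def schedule(self, event):
        onset = round(event.start_seconds * self.SAMPLE_RATE)
        return f"trigger({event.sample_name!r}, out={event.channel}, at={onset})"


def perform(score, backend):
    """Render the same score through whichever backend is plugged in."""
    return [backend.schedule(event) for event in score]
```

With this split, moving to a new platform means writing one new adapter class; the score itself (the list of `PlayEvent` objects) does not change.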

All of this requires an ontology for mapping synonymy of synths and waveform samples and libraries and channels/layers (and module fan-in and fan-out topology, and inter-platform clock time-division resolution, and inter-platform sampling-frequency and bit-depth skew, and EQ and reverb and other processing idioms, frequencies and pitch-bends, vocoder and compander FX, attack/decay envelopes, and timing, etc.) on different configs…

This might be done in OWL/RDF or other environments, but such an ontology does not yet exist so far as I am aware. Current-generation mapping paradigms range from 2D graphical ‘wire-patching’ of Max/MSP and Pd, to primitive source-code ‘text-based patching’ as in SuperCollider or ChucK, to World of Warcraft (and others’) OpenGL ES patching of urMus elements, to Marsyas’s Qt4-based patching.
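One narrow slice of that mapping problem, the sampling-frequency skew, can at least be worked numerically. The sketch below assumes an event onset stored as a sample count on the old platform and asks where it lands, and how far off, at the new platform’s sample rate. The function name `convert_onset` is my own; exact fractions are used so that the reported error is not itself a rounding artifact.

```python
from fractions import Fraction


def convert_onset(onset_samples, src_rate, dst_rate):
    """Map an onset from src_rate sample counts to the nearest dst_rate sample.

    Returns (new_onset_samples, error_seconds). error_seconds is how far the
    rounded onset lands from the original instant, as an exact Fraction.
    """
    exact = Fraction(onset_samples * dst_rate, src_rate)
    rounded = round(exact)
    error = Fraction(rounded, dst_rate) - Fraction(onset_samples, src_rate)
    return rounded, error
```

An onset exactly one second in (sample 44100 at 44.1 kHz) maps cleanly to sample 48000 at 48 kHz, but an onset at sample 1 cannot be represented exactly and lands about 1.8 microseconds early. The error is never more than half a destination sample, which is the kind of "indistinguishably different timing" a port would need to document.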

As things are right now, you do in-line modding of your source code, encapsulating the syntactic result of your work, but not the behaviors of your work. By contrast, true refactorings capture the behaviors and port those behaviors correctly to the new platform(s), not just the syntactic synonymy.

Many refactors that I do in Java/Eclipse are manual and really tedious. There is a refactor to convert an anonymous type to nested, and then nested to top-level, but there is nothing to convert a top-level type to anonymous (or nested). Lots of cut-and-paste required. Ultimately, you write your own source-modifying refactors. Chaining other refactors together into a script is only a start.

Take Max/MSP, for example. Max is a data-flow procedural programming language in which programs are called “patches” and constructed by connecting “objects” within a “patcher”, the 2D Max/MSP GUI IDE. These objects are dynamically-linked libraries, each of which may receive input (through one or more “inlets”), generate output (through “outlets”), or both. Objects pass messages from their outlets to the inlets of connected objects.

Max/MSP supports six basic atomic data types that can be transmitted as messages from object to object: int, float, list, symbol, bang, and signal (for MSP audio connections). A number of more complex data structures exist within the program for handling numeric arrays (table data), hash tables (coll data), and XML information (pattr data). An MSP data structure (buffer~) can hold digital audio information within main memory. In addition, the Jitter package adds a scalable, multi-dimensional data structure for handling large sets of numbers for storing video and other datasets (matrix data). Max/MSP is object-oriented and involves libraries of objects that are linked and scheduled and dispatched by a patcher executive. Most objects are non-graphical, consisting only of an object’s name and a number of arguments/attributes (in essence class properties) typed into an object box. Other objects are graphical, including sliders, number boxes, dials, table editors, pull-down menus, buttons, and other objects for running the program interactively. Max/MSP/Jitter comes with hundreds of these objects in the standard package; extensions to the program are written by composers and third-party developers as Max patchers (e.g., by encapsulating some of the functionality of a patcher into a sub-program that is itself a Max patch) or as objects written in C, C++, Java, or JavaScript. And those, too, require refactoring from time to time, just like your compositions do.
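For readers who have never opened a patcher, the message-passing model described above can be imitated in a few lines of Python. This is a toy, not Max’s actual object API: the classes `Box`, `Counter`, `Multiply` and `Collector` are invented here, each box has a single inlet and outlet, and `BANG` is just a sentinel standing in for Max’s bang message.

```python
BANG = object()  # stand-in for a "bang" trigger message


class Box:
    """Toy object box: one inlet, one outlet; an outlet may fan out."""

    def __init__(self):
        self.connections = []

    def connect(self, other):
        """Patch this box's outlet to another box's inlet; returns that box."""
        self.connections.append(other)
        return other

    def send(self, message):
        for target in self.connections:
            target.receive(message)

    def receive(self, message):
        raise NotImplementedError


class Counter(Box):
    """Emits 1, 2, 3, ... each time it receives a bang."""

    def __init__(self):
        super().__init__()
        self.count = 0

    def receive(self, message):
        if message is BANG:
            self.count += 1
            self.send(self.count)


class Multiply(Box):
    """Scales every incoming number by a fixed argument."""

    def __init__(self, factor):
        super().__init__()
        self.factor = factor

    def receive(self, message):
        self.send(message * self.factor)


class Collector(Box):
    """Records whatever arrives, so the patch output can be inspected."""

    def __init__(self):
        super().__init__()
        self.received = []

    def receive(self, message):
        self.received.append(message)


def build_and_run():
    """Wire counter -> multiply -> collector and bang the counter three times."""
    counter, scale, sink = Counter(), Multiply(0.5), Collector()
    counter.connect(scale).connect(sink)
    for _ in range(3):
        counter.receive(BANG)
    return sink.received
```

Even in a toy this small, the behavior of the piece lives in the wiring as much as in the objects, which is why porting a patch means preserving the graph and its message order, not just the object names.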

Refactoring can change program behavior. For example, a ‘PullUpMembers’ refactoring changes this Max DSP method that previously was encapsulated in and extends a class ... pulls it up into the class that was previously its parent: that is the example that the A-to-B refactoring above illustrates.

But a PullUpMembers refactoring can cause concurrency bugs when it mishandles a ‘synchronized’ method. When that happens, methods in the parent and child can be time-interleaved in arbitrary ways by the operating system, different from what was the case in the original composition. It messes up the sound. You don’t want that. You need to take special engineerly steps to prevent it. You can’t just ‘plug-and-play’.
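The mechanism is easier to see in miniature. In Java, a static synchronized method locks on the class that declares it, so moving such a method from child to parent silently changes which lock it holds. The sketch below is only a Python analogue of that situation: the `Monitor` class, the method names `fill_buffer` and `read_buffer`, and the `BEFORE`/`AFTER` tables are all hypothetical, and the check is deliberately single-threaded so that it is deterministic.

```python
import threading


class Monitor:
    """One lock per class, mimicking the monitor tied to each Java class object."""

    def __init__(self, name):
        self.name = name
        self.lock = threading.Lock()


PARENT = Monitor("Parent")
CHILD = Monitor("Child")

# Which monitor guards each method before and after the pull-up.
BEFORE = {"fill_buffer": CHILD, "read_buffer": CHILD}
AFTER = {"fill_buffer": PARENT, "read_buffer": CHILD}  # fill_buffer pulled up


def can_interleave(layout, first, second):
    """True if `second` could start while `first` is inside its critical section."""
    with layout[first].lock:
        acquired = layout[second].lock.acquire(blocking=False)
        if acquired:
            layout[second].lock.release()
        return acquired
```

Before the pull-up, `can_interleave(BEFORE, "fill_buffer", "read_buffer")` is false: the two methods exclude each other. Afterwards it is true, and a reader can now run in the middle of a half-filled buffer. Nothing in the source text of either method changed; only its location did.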

MoveMethod refactorings can cause deadlock and starvation, with mutex and lock conflicts. Other refactorings can give rise to other sorts of bugs. The upshots of this are that (a) creating an automated, unsupervised refactoring engine for any of the current-generation computer composition IDEs and the scripts/patches they emit would be incredibly difficult, and nobody has yet done it [because there’s not enough money in it to make a viable business case], and (b) doing it semi-supervised or, worse, manually is a tough slog, mitigated only by considerable skill and fluency in the tools and coding. Hence my remark above that it ain’t gonna happen unless you become a decent engineer as well as a musician.
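One engineerly step that does not need an automated refactoring engine is a lock-order check. The sketch below assumes you can write down, for each code path, the order in which it takes its locks (the path and lock names used in the usage note are hypothetical). It builds a lock-order graph and reports whether the graph contains a cycle, which is the classic warning sign for a possible deadlock.

```python
def lock_order_edges(acquisitions):
    """acquisitions maps a code path to the ordered list of locks it takes.

    Returns the set of (held, wanted) pairs implied by those orders.
    """
    edges = set()
    for locks in acquisitions.values():
        for i, held in enumerate(locks):
            for wanted in locks[i + 1:]:
                edges.add((held, wanted))
    return edges


def has_potential_deadlock(acquisitions):
    """True if the lock-order graph contains a cycle."""
    graph = {}
    for held, wanted in lock_order_edges(acquisitions):
        graph.setdefault(held, set()).add(wanted)

    visiting, done = set(), set()

    def visit(node):
        if node in done:
            return False
        if node in visiting:
            return True  # came back to a lock already on the current path
        visiting.add(node)
        cyclic = any(visit(nxt) for nxt in graph.get(node, ()))
        visiting.discard(node)
        done.add(node)
        return cyclic

    return any(visit(node) for node in list(graph))
```

Suppose `set_gain` originally lived on a mixer and took the mixer lock and then the voice lock, the same order as `render`. If a MoveMethod relocates it onto the voice so that it now takes the voice lock first, the two paths disagree about order and `has_potential_deadlock` flags it. A cycle means a deadlock is possible, not that one will occur on every run.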

The video game Half-Life 2 (HL2) uses realtime sound event calls to Pd as its sound OS. Game events are sent from HL2 to the Open Sound Control (OSC) engine, which triggers the sound by Pd via the network. Pd has the sample data and the specified sound-behaviors in-memory and can be instructed to modify those in realtime (perhaps by other game events and other processing of those) without recompiling. World of Warcraft uses Lua and Max/MSP.

“Any program feature without an automated test simply doesn’t exist.”

— Kent Beck, Extreme Programming Explained, p. 57.
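To make the game-to-Pd path described above a little less abstract, here is a minimal sketch of packing one game event as an OSC 1.0 message using only the Python standard library. The address `/footstep`, the host and the port are made up for illustration, and a Pd patch listening for OSC on that UDP port is assumed rather than shown.

```python
import socket
import struct


def _osc_string(text):
    """OSC strings are ASCII, NUL-terminated, and padded to a multiple of 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address, *floats):
    """Encode an OSC 1.0 message whose arguments are all 32-bit floats."""
    type_tags = "," + "f" * len(floats)
    payload = b"".join(struct.pack(">f", value) for value in floats)
    return _osc_string(address) + _osc_string(type_tags) + payload


def send_event(packet, host="127.0.0.1", port=9000):
    """Fire-and-forget UDP send; host and port are placeholders."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, port))
```

Because the wire format is this small and this well specified, the game side and the sound side can be ported independently, and the encoder can be covered by an automated test, which is rather the point of the quotation above.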

Think about it: if composers who are writing and maintaining video game scores across multiple years’ releases and across various gaming platforms do refactorings of their compositions out of economic necessity, why not you? Is your music any less deserving of future live performances than video game music?

Would IRCAM or conservatories with computer music curricula please add some courseware by engineers who are knowledgeable about refactoring? Save the Music!

“The secret to creativity is knowing how to hide your sources [and efficiently refactor them in perpetuum].”

— Albert Einstein, amateur violinist.

- Fourth Workshop on Refactoring Tools (WRT'11), 21-27-MAY, Honolulu, Hawaii (ICSE conf)

- Expo'74, 14-15-OCT-2011, NYU Polytechnic, Brooklyn, NY; $295 2-day early registration [Max/MSP sharing]

- L’Œil de la Médiathèque de l’IRCAM search 'refactor' [basically, "crickets", as of 19-APR-2011]

- Cycling74, Max/MSP, Jitter, related products

- Max/MSP user-authored toolbox

- MaxObjects.com

- Compusition (Adam Murray)

- Le-son666 Java plugin to write Max/MSP Java code referencing Pd objects (Pascal Gauthier)

- Miller Puckette page at UCSD Dept of Music [open-source Pd download]

- Pd docset

- Essl G. UrMus: An environment for mobile instrument design and performance. ICMC 2010.

- Klemmer S, Landay J. Toolkit support for integrating physical and digital interactions. Hum Comput Interact 2009;24:315-66.

- Schäfer M, et al. Correct refactoring of concurrent Java code. IBM Research Technical Report, 2010.

- Spring J, et al. Reflexes: Abstractions for integrating highly-responsive tasks into Java applications. ACM Trans Embedded Comput Sys 2010;10(4):1-29.

- Verbaere M. A language to script refactoring transformations. Oxford D.Phil. Dissertation/Microsoft Research Technical Report, 2008.

- LuaEdit IDE

- Lua-Users.org

- LuaEclipse plugin for Eclipse

- OpenGLES

- urMus (Georg Essl at Univ Michigan Comp Sci Dept)

- Marsyas (Graham Percival & George Tzanetakis)

- RedGate SQLprompt refactoring tool

- Ambler S, Sadalage P. Refactoring Databases: Evolutionary Database Design. Addison-Wesley, 2011.

- Beck K, Andres C. Extreme Programming Explained: Embrace Change. 2e. Addison-Wesley, 2004.

- Beck K, Fowler M. Planning Extreme Programming. Addison-Wesley, 2000.

- Cipriani A, Giri M. Electronic Music and Sound Design - Theory and Practice with Max/MSP. Contemponet, 2010.

- Cole L, Borba P. Deriving Refactorings for AspectJ: An Approach to Refactoring Aspect-oriented Applications Using Composed Programming Laws. VDM, 2011.

- Fowler M, et al. Refactoring: Improving the Design of Existing Code. Addison-Wesley, 1999.

- Kerievsky J. Refactoring to Patterns. Addison-Wesley, 2004.

- Kuldell N, Lerner N. Genome Refactoring. Morgan & Claypool, 2009.

- Laddad R. Aspect Oriented Refactoring. Addison-Wesley, 2008.

- Manzo V. Max/MSP/Jitter for Music: A Practical Guide to Developing Interactive Music Systems. Oxford Univ, 2011.

- Pytel C, Saleh T. Rails AntiPatterns: Best Practice Ruby on Rails Refactoring. Addison-Wesley, 2010.

- Wloka J. Tool-supported Refactoring of Aspect-oriented Programs: Why Aspect-oriented Programming Prevents Developers from Using Their Favorite Refactoring Tools, and How These Tools Can Be Made Aspect-aware. VDM, 2008.

- JRefactory at Sourceforge.net
